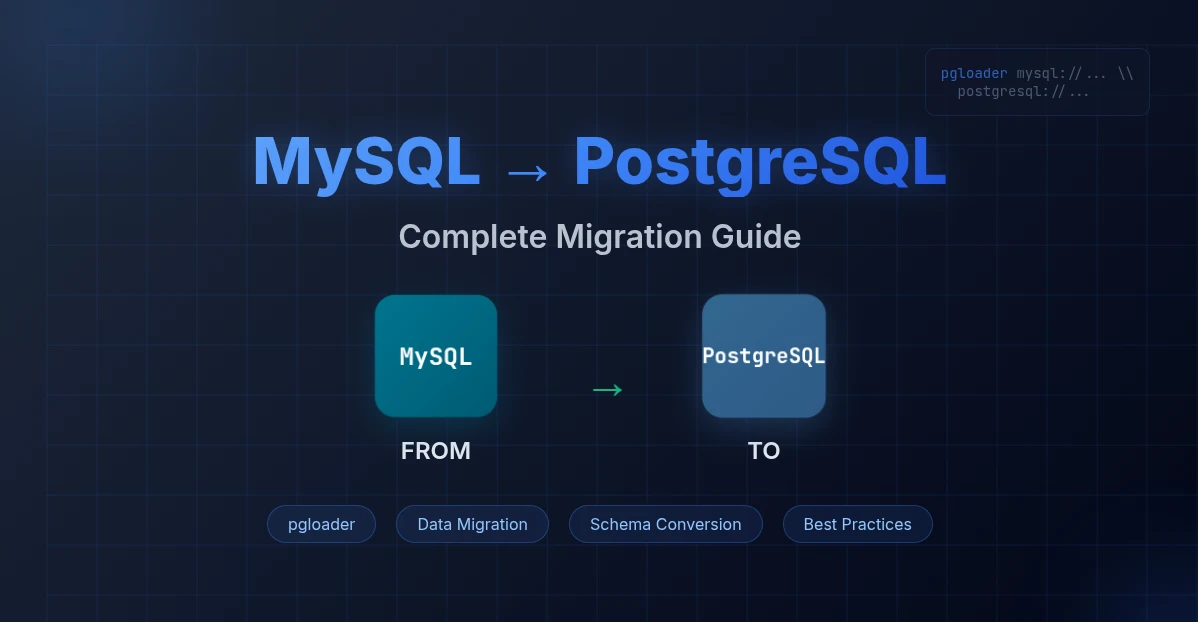

Database migration is one of those tasks that can either go smoothly or turn into a nightmare depending on your preparation. If you’re considering migrating from MySQL to PostgreSQL, you’re making a smart choice – PostgreSQL offers superior data integrity, better JSON support, advanced indexing, and robust ACID compliance. However, the migration process requires careful planning and understanding of the differences between these two database systems.

In this comprehensive guide, I’ll walk you through the entire migration process, common pitfalls, and practical solutions based on real-world experience.

Why Migrate from MySQL to PostgreSQL?

Before diving into the technical aspects, let’s quickly cover why you might want to make this switch:

- Better data integrity: PostgreSQL has stricter data validation and better constraint enforcement

- Advanced features: Superior JSON/JSONB support, array types, window functions, and CTEs

- Standards compliance: More faithful implementation of SQL standards

- Extensibility: Rich ecosystem of extensions and custom data types

- Concurrency: Better handling of concurrent transactions with MVCC

Understanding the Key Differences

Data Types

One of the biggest challenges in migration is handling data type differences:

| MySQL | PostgreSQL | Notes |

|---|---|---|

AUTO_INCREMENT |

SERIAL or BIGSERIAL |

PostgreSQL uses sequences |

TINYINT(1) |

BOOLEAN |

MySQL’s boolean equivalent |

DATETIME |

TIMESTAMP |

Similar but different precision handling |

TEXT |

TEXT |

Generally compatible |

VARCHAR(255) |

VARCHAR(255) |

Same, but PostgreSQL has no practical limit |

Case Sensitivity

PostgreSQL is case-sensitive by default, while MySQL is generally case-insensitive. This affects:

- Table and column names

- String comparisons

- Index usage

SQL Syntax Differences

- Quotes: MySQL uses backticks

`, PostgreSQL uses double quotes" - LIMIT syntax: MySQL supports

LIMIT offset, count, PostgreSQL usesLIMIT count OFFSET offset - Date functions: Different function names and syntax

Migration Methods

Method 1: pgloader (Recommended)

pgloader is a specialized tool designed specifically for database migrations. It handles most conversion issues automatically.

Installation:

# Ubuntu/Debian

sudo apt-get install pgloader

# macOS

brew install pgloader

# Or download from GitHub releases

Basic usage:

pgloader mysql://user:password@localhost/source_db postgresql://user:password@localhost/target_db

Advanced configuration with type casting:

LOAD DATABASE

FROM mysql://username:password@localhost/source_database

INTO postgresql://username:password@localhost/target_database

WITH include drop, create tables, create indexes, reset sequences

CAST type int to bigint,

type integer to bigint,

type mediumint to bigint,

type smallint to bigint,

type tinyint when (= precision 1) to boolean using tinyint-to-boolean

ALTER SCHEMA 'source_database' RENAME TO 'public';

Save this as migration.load and run:

pgloader migration.load

Method 2: Manual Export/Import

For more control over the process:

Step 1: Export from MySQL

mysqldump -u username -p --single-transaction --routines --triggers source_database > mysql_dump.sql

Step 2: Convert syntax

You’ll need to modify the dump file to handle:

- Quote character differences

- Data type conversions

- Function name changes

- SQL dialect variations

Step 3: Import to PostgreSQL

psql -U username -d target_database -f converted_dump.sql

Method 3: ETL Tools

For large-scale migrations, consider:

- AWS Database Migration Service (DMS): Great for cloud migrations

- Pentaho Data Integration: Open-source ETL with visual interface

- Talend: Enterprise-grade data integration platform

Common Issues and Solutions

Foreign Key Constraint Errors

Problem:

Database error 42804: foreign key constraint "answers_question_id_foreign" cannot be implemented

DETAIL: Key columns "question_id" and "id" are of incompatible types: numeric and bigint.

Solution:

Configure pgloader to cast all integer types consistently:

CAST type int to bigint,

type integer to bigint,

type mediumint to bigint,

type smallint to bigint

Duplicate Key Errors

Problem:

ERROR Database error 23505: duplicate key value violates unique constraint "idx_17514_primary"

DETAIL: Key (id)=(1) already exists.

Solutions:

- Truncate target tables:

TRUNCATE TABLE table_name CASCADE;

- Use pgloader with truncate option:

WITH data only, truncate, reset sequences

- Reset sequences after import:

SELECT setval(pg_get_serial_sequence('table_name', 'id'),

(SELECT MAX(id) FROM table_name));

Schema-Related Issues

If your application uses a specific schema (common in Laravel applications):

Check current schema:

SELECT schemaname, tablename

FROM pg_tables

WHERE schemaname NOT IN ('information_schema', 'pg_catalog');

Drop specific schema:

DROP SCHEMA schema_name CASCADE;

CREATE SCHEMA schema_name;

GRANT ALL ON SCHEMA schema_name TO username;

Framework-Specific Considerations

Laravel Applications

Laravel applications often create tables in custom schemas. Check your config/database.php:

'connections' => [

'pgsql' => [

// ... other config

'search_path' => 'your_schema_name',

// or

'schema' => 'your_schema_name',

],

]

Ensure your migration targets the correct schema:

ALTER SCHEMA 'source_database' RENAME TO 'your_laravel_schema';

Other Frameworks

- Django: Check

DATABASESsetting insettings.py - Rails: Look at

database.ymlconfiguration - Symfony: Check

doctrine.yamlor.envdatabase URL

Step-by-Step Migration Process

1. Preparation

Backup everything:

# MySQL backup

mysqldump -u username -p --all-databases > mysql_full_backup.sql

# PostgreSQL backup (if you have existing data)

pg_dumpall -U username > postgresql_backup.sql

Analyze your schema:

-- In MySQL, check all tables and their relationships

SELECT

TABLE_NAME,

COLUMN_NAME,

DATA_TYPE,

IS_NULLABLE,

COLUMN_DEFAULT

FROM INFORMATION_SCHEMA.COLUMNS

WHERE TABLE_SCHEMA = 'your_database'

ORDER BY TABLE_NAME, ORDINAL_POSITION;

2. Setup Target Database

-- Create database

CREATE DATABASE target_database OWNER your_username;

-- Create necessary schemas

CREATE SCHEMA IF NOT EXISTS your_schema;

GRANT ALL ON SCHEMA your_schema TO your_username;

3. Migration Execution

Option A: Full migration with pgloader

pgloader --with "include drop, create tables, create indexes, reset sequences"

mysql://user:pass@localhost/source_db

postgresql://user:pass@localhost/target_db

Option B: Schema first, then data

# First, migrate schema only

pgloader --with "schema only" mysql://... postgresql://...

# Review and modify schema if needed

# Then migrate data

pgloader --with "data only" mysql://... postgresql://...

4. Post-Migration Tasks

Verify data integrity:

-- Check row counts

SELECT

schemaname,

tablename,

n_tup_ins as "rows"

FROM pg_stat_user_tables

ORDER BY schemaname, tablename;

Update application configuration:

- Database connection strings

- Query syntax (if using raw SQL)

- Data type handling in your code

Test thoroughly:

- Run your application’s test suite

- Verify all CRUD operations

- Check complex queries and reports

- Test user authentication and permissions

Performance Optimization Post-Migration

Analyze and Vacuum

-- Analyze all tables for query planner

ANALYZE;

-- Vacuum to reclaim space and update statistics

VACUUM ANALYZE;

Index Optimization

-- Find missing indexes

SELECT schemaname, tablename, attname, n_distinct, correlation

FROM pg_stats

WHERE schemaname = 'your_schema'

ORDER BY n_distinct DESC;

Connection Pooling

Consider implementing connection pooling with tools like:

- PgBouncer

- Pgpool-II

- Application-level pooling

Troubleshooting Common Problems

Character Encoding Issues

-- Check database encoding

SELECT datname, encoding FROM pg_database WHERE datname = 'your_database';

-- If needed, recreate with correct encoding

CREATE DATABASE new_database WITH ENCODING 'UTF8';

Permission Problems

-- Grant necessary permissions

GRANT ALL PRIVILEGES ON DATABASE your_database TO your_user;

GRANT ALL ON SCHEMA your_schema TO your_user;

GRANT ALL ON ALL TABLES IN SCHEMA your_schema TO your_user;

Application Compatibility

- Update database drivers to PostgreSQL versions

- Modify ORM configurations

- Update connection pooling settings

- Review and update raw SQL queries

Migration Checklist

Pre-Migration:

During Migration:

Post-Migration:

Conclusion

Migrating from MySQL to PostgreSQL can be straightforward with the right tools and preparation. pgloader handles most of the heavy lifting, but understanding the differences between the two database systems is crucial for a successful migration.

The key to success is thorough testing. Don’t just check that the migration completed without errors – verify that your application works correctly with the new database, perform load testing, and ensure all features function as expected.

Remember that migration is not just a technical process but also an opportunity to review and optimize your database design. Take advantage of PostgreSQL’s advanced features to improve your application’s performance and reliability.

With careful planning and execution, your migration to PostgreSQL will provide a solid foundation for your application’s future growth and development.

Have you completed a MySQL to PostgreSQL migration? Share your experiences and additional tips in the comments below.

In case you have found a mistake in the text, please send a message to the author by selecting the mistake and pressing Ctrl-Enter.