Neural networks are the fundamental components of deep learning techniques and one of the most important topics in this field. Deep learning is a sub-branch of machine learning in which models with many layers learn patterns directly from data instead of following explicitly programmed rules. In this article, we will discuss the basic types of neural network algorithms in deep learning and understand how they work. There are many types of neural network algorithms, and we will try to cover the most common ones that all students should know.

Introduction to Neural Networks

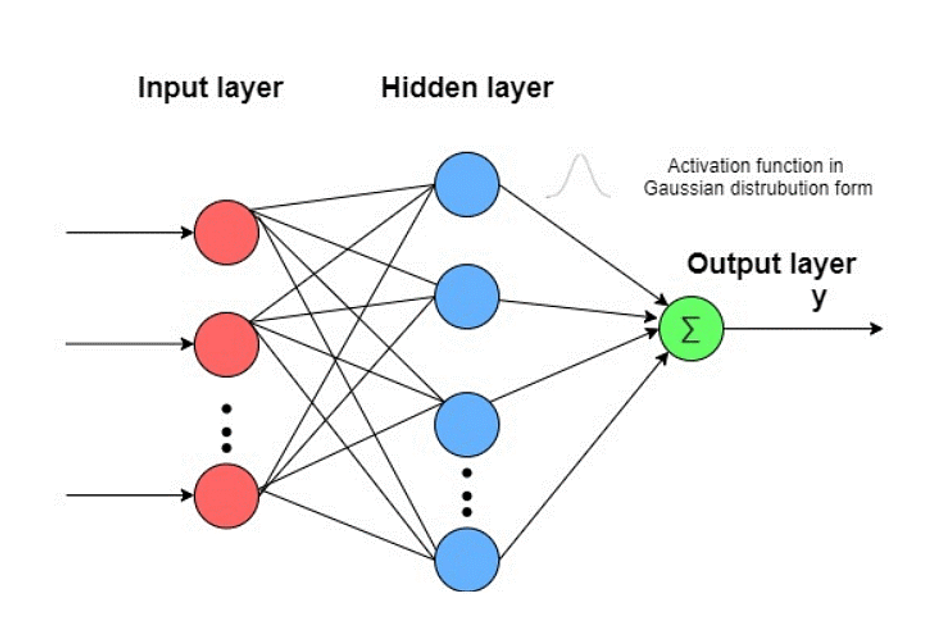

Neural networks are loosely inspired by the human brain, where neurons are connected in specific patterns and pass signals to one another to transfer and process information. A neural network has three types of layers:

- Input layer

- Hidden layer

- Output layer

The number and size of the hidden layers are important because they largely determine the complexity of the patterns the network can learn and, therefore, its performance. Each connection between two neurons carries a weight, and these weights are learned during training. A neuron multiplies each of its inputs by the corresponding weight, adds the results together with a bias term, and passes the sum through an activation function before sending the result on to the neurons in the next layer. During training, the network compares its predicted output with the expected (target) output and calculates the error. This error is then propagated backward through the layers (backpropagation), and the weights are adjusted to reduce it. The process is repeated over many examples until the predictions are accurate enough.
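The following sketch shows these steps for a single neuron: a weighted sum plus a bias, a sigmoid activation, a comparison of the prediction with the target, and a weight update. It is a minimal illustration, assuming a sigmoid activation, a cross-entropy loss, and the logical OR function as a hypothetical training set. With only one neuron, backpropagation reduces to a single gradient step. The names `forward`, `train_neuron`, and `OR_DATA` are our own.

```python
import math


def sigmoid(s):
    # Squashes any real number into the range (0, 1)
    return 1.0 / (1.0 + math.exp(-s))


def forward(inputs, weights, bias):
    # Weighted sum of the inputs plus a bias, passed through the activation function
    s = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(s)


def train_neuron(data, epochs=2000, lr=0.5):
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, target in data:
            y = forward(inputs, weights, bias)
            # Compare the prediction with the expected output
            error = y - target
            # For a sigmoid output with cross-entropy loss, the gradient of the loss
            # with respect to the weighted sum is exactly (y - target)
            weights = [w - lr * error * x for w, x in zip(weights, inputs)]
            bias -= lr * error
    return weights, bias


# Hypothetical training set: the logical OR function
OR_DATA = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

if __name__ == "__main__":
    w, b = train_neuron(OR_DATA)
    for inputs, target in OR_DATA:
        print(inputs, target, round(forward(inputs, w, b), 3))
```

A real network repeats the same forward computation layer by layer and uses backpropagation to push the error back through every hidden layer.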

Types of Neural Network Algorithms

There are multiple types of neural networks, each designed for particular kinds of tasks and data. This variety makes deep learning versatile, and as a result, neural networks are used in a wide range of technical and everyday applications. Here are some important types of neural networks that play a vital role in deep learning:

Convolutional Neural Networks

The convolutional neural network (also known as a CNN or ConvNet) was pioneered by Yann LeCun, whose LeNet architecture was developed in the late 1980s. It was first used successfully to recognize handwritten zip codes and other digits, and later research led to more advanced applications such as image recognition and object detection.

Layers in CNNs

CNNs are accurate on images and other grid-like data because their layers automatically learn useful features from the raw input: early layers tend to detect simple patterns such as edges, while deeper layers combine them into more complex shapes. Here are the main layers of CNNs:

- Convolution layer

- Rectified linear unit (ReLU) layer

- Pooling layer

- Fully connected layers

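As the data passes through these layers, the convolution layers apply learned filters that detect local features, the ReLU layers set negative values to zero to introduce nonlinearity, the pooling layers downsample the feature maps to make them smaller and more robust to small shifts, and the fully connected layers combine the extracted features to produce the final prediction.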
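To make the four CNN layers concrete, here is a minimal pure-Python sketch of one pass through them on a toy 6x6 image containing a vertical edge. The kernel and the fully connected weights are hand-picked, hypothetical values; in a real CNN they are learned, and libraries such as PyTorch or Keras perform these operations on tensors with many channels and filters.

```python
def conv2d(image, kernel):
    # "Valid" 2D convolution with stride 1. Like most deep learning libraries,
    # this slides the kernel without flipping it (strictly, cross-correlation).
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [[sum(image[i + di][j + dj] * kernel[di][dj]
                 for di in range(kh) for dj in range(kw))
             for j in range(out_w)]
            for i in range(out_h)]


def relu(feature_map):
    # Set negative values to zero
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool(feature_map, size=2):
    # Non-overlapping max pooling; leftover rows/columns that do not fill a window are dropped
    return [[max(feature_map[i + di][j + dj] for di in range(size) for dj in range(size))
             for j in range(0, len(feature_map[0]) - size + 1, size)]
            for i in range(0, len(feature_map) - size + 1, size)]


def flatten(feature_map):
    return [v for row in feature_map for v in row]


def dense(features, weights, bias):
    # Fully connected layer: every output is a weighted sum of all input features
    return [sum(f * w for f, w in zip(features, row)) + b
            for row, b in zip(weights, bias)]


# Toy 6x6 image: dark on the left, bright on the right (a vertical edge)
IMAGE = [[0.0, 0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(6)]
# Hand-picked vertical-edge kernel; in a real CNN these values are learned
EDGE_KERNEL = [[-1.0, 0.0, 1.0] for _ in range(3)]

if __name__ == "__main__":
    fmap = max_pool(relu(conv2d(IMAGE, EDGE_KERNEL)))
    # Hypothetical weights for a two-class output
    scores = dense(flatten(fmap), [[0.25] * 4, [-0.25] * 4], [0.0, 0.0])
    print(fmap, scores)
```

The convolution responds strongly only where the edge is, and pooling halves each spatial dimension while keeping that strong response.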

Kohonen’s Self-Organizing Feature Maps

These networks were developed by Teuvo Kohonen in the 1980s and are also known as self-organizing feature maps (SOFMs) or self-organizing maps (SOMs). The neurons in a SOFM are arranged in a grid, usually two-dimensional. During training, the map organizes itself without labeled data (unsupervised learning), so that neurons that are close together on the grid end up responding to similar inputs.

The following are the main features of Kohonen’s self-organizing maps that make them useful in many fields:

- Topology preservation

- Grid-like structure

- Competitive learning

These networks reduce the complexity of the data by mapping it from a high-dimensional space onto a low-dimensional (usually two-dimensional) grid. This mapping is not lossless, but it preserves the topology of the data: points that are similar in the original space are mapped to nearby neurons on the grid, which makes the data easier to visualize and process than the raw data. Because similar inputs are grouped together, SOFMs are also useful for clustering. Training a SOFM involves five basic stages:

- Initialization

- Sampling

- Matching

- Updating

- Continuation

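In each iteration, a training sample is drawn at random, the neuron whose weights are closest to it (the best matching unit, or BMU) is found, and the BMU and its neighbors on the grid are moved slightly toward the sample. The learning rate and the neighborhood size shrink as training continues, so the map settles into a stable arrangement in which similar inputs activate nearby neurons.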
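The five stages can be sketched in a few lines of pure Python. This is a minimal illustration, not a reference implementation: it assumes a Gaussian neighborhood function, linearly decaying learning rate and radius, and a small hypothetical two-cluster dataset. The function names `find_bmu` and `train_som` are our own.

```python
import math
import random


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def find_bmu(weights, x):
    # Matching: the best matching unit (BMU) is the node whose weight vector is closest to x
    return min(weights, key=lambda node: sq_dist(weights[node], x))


def train_som(data, rows, cols, steps=2000, lr0=0.5, seed=0):
    rng = random.Random(seed)
    dim = len(data[0])
    radius0 = max(rows, cols) / 2.0
    # Initialization: a random weight vector for every node on the grid
    weights = {(r, c): [rng.random() for _ in range(dim)]
               for r in range(rows) for c in range(cols)}
    for t in range(steps):
        # Continuation: the learning rate and neighborhood radius shrink over time
        frac = t / steps
        lr = lr0 * (1.0 - frac)
        radius = max(radius0 * (1.0 - frac), 0.5)
        # Sampling: pick a random training vector
        x = rng.choice(data)
        # Matching
        br, bc = find_bmu(weights, x)
        # Updating: move the BMU and its grid neighbors toward x,
        # weighted by a Gaussian of their grid distance to the BMU
        for (r, c), w in weights.items():
            grid_d2 = (r - br) ** 2 + (c - bc) ** 2
            h = math.exp(-grid_d2 / (2.0 * radius ** 2))
            weights[(r, c)] = [wi + lr * h * (xi - wi) for wi, xi in zip(w, x)]
    return weights


if __name__ == "__main__":
    # Hypothetical data: two well-separated clusters in 2D
    data = [[0.1, 0.1], [0.15, 0.05], [0.05, 0.12],
            [0.9, 0.9], [0.85, 0.95], [0.95, 0.88]]
    som = train_som(data, rows=4, cols=4)
    print(find_bmu(som, [0.1, 0.1]), find_bmu(som, [0.9, 0.9]))
```

After training, points from the two clusters are matched to different grid nodes, which is the grouping behavior described above.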

Long Short-Term Memory Networks

Long short-term memory networks (LSTMs) are a type of recurrent neural network (RNN). Besides the hidden state that ordinary RNNs pass from one time step to the next, an LSTM maintains a cell state that acts as a memory, which lets it learn dependencies across long sequences that plain RNNs struggle with. Each LSTM cell contains four interacting layers (the forget gate, the input gate, the candidate layer, and the output gate), and the cells are repeated in a chain, one per time step. Here is an overview of how an LSTM works:

- The forget gate decides which information in the cell state is irrelevant and should be discarded, keeping the useful information.

- The input gate and the candidate layer, which use sigmoid and tanh functions, decide which new information to add and update the cell state accordingly.

- The output gate decides which part of the updated cell state is passed on as the output (the hidden state) for the current time step.
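Here is a minimal pure-Python sketch of one LSTM time step, following the commonly used gate equations: sigmoid for the forget, input, and output gates and tanh for the candidate values. Storing each gate's parameters as a `(W, U, b)` tuple in a dictionary keyed by gate name is our own hypothetical convention; libraries such as PyTorch pack these weights into larger matrices.

```python
import math
import random


def sigmoid(s):
    return 1.0 / (1.0 + math.exp(-s))


def affine(W, U, b, x, h):
    # Computes W·x + U·h + b for one gate (W: hidden×input, U: hidden×hidden)
    return [sum(wij * xj for wij, xj in zip(w_row, x)) +
            sum(uij * hj for uij, hj in zip(u_row, h)) + bi
            for w_row, u_row, bi in zip(W, U, b)]


def lstm_step(x, h_prev, c_prev, params):
    f = [sigmoid(v) for v in affine(*params["forget"], x, h_prev)]       # what to discard
    i = [sigmoid(v) for v in affine(*params["input"], x, h_prev)]        # how much to write
    g = [math.tanh(v) for v in affine(*params["candidate"], x, h_prev)]  # what to write
    o = [sigmoid(v) for v in affine(*params["output"], x, h_prev)]       # what to expose
    # New cell state: keep part of the old memory and add part of the candidate
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    # New hidden state: a filtered view of the cell state
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c


if __name__ == "__main__":
    rng = random.Random(0)
    H, D = 2, 1  # hypothetical hidden size and input size

    def rand_gate():
        return ([[rng.uniform(-0.5, 0.5) for _ in range(D)] for _ in range(H)],
                [[rng.uniform(-0.5, 0.5) for _ in range(H)] for _ in range(H)],
                [0.0] * H)

    params = {name: rand_gate() for name in ("forget", "input", "candidate", "output")}
    h, c = [0.0] * H, [0.0] * H
    for x_t in ([1.0], [0.5], [-1.0]):  # a short input sequence
        h, c = lstm_step(x_t, h, c, params)
    print(h, c)
```

Setting a large positive forget-gate bias and a large negative input-gate bias makes the cell state pass through almost unchanged, which is how an LSTM can carry information over many time steps.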

Generative Adversarial Networks (GANs)

Generative adversarial networks have attracted growing interest because they can generate realistic new data. One application is super-resolution, where a GAN turns low-resolution images or video frames into higher-resolution versions. These networks consist of two basic parts:

- Generator

- Discriminator

The generator creates new data, called fake data, that resembles the real training data. The discriminator, on the other hand, learns to distinguish the real data from the fake data. The following cycle repeats during training:

- The generator takes random noise as input and produces fake data

- The discriminator receives both real data and the generator's fake data and learns to tell them apart

- The generator is updated so that its fake data is more likely to be classified as real

The whole process repeats, so the two networks improve by competing with each other: the discriminator gets better at spotting fake data, and the generator gets better at producing data that fools it. Over many iterations, the generated data becomes increasingly difficult to distinguish from the real data.
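The adversarial cycle can be illustrated with a deliberately tiny, one-dimensional GAN. This is a toy sketch under several assumptions of our own: the "real" data is drawn from a normal distribution with mean 4, the generator is a linear map `a*z + b` of Gaussian noise, the discriminator is a single logistic unit `sigmoid(w*x + c)`, and the gradients are derived by hand instead of with automatic differentiation. It uses the common non-saturating generator loss. Practical GANs use deep networks for both parts.

```python
import math
import random


def sigmoid(s):
    # Numerically stable logistic function
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def generator(z, a, b):
    # Toy generator: a linear map from noise z to a fake sample
    return a * z + b


def discriminator(x, w, c):
    # Toy discriminator: estimated probability that x is real
    return sigmoid(w * x + c)


def d_loss(real, fake, w, c):
    # Binary cross-entropy: real samples are labelled 1, fake samples 0
    loss_real = -sum(math.log(discriminator(x, w, c)) for x in real) / len(real)
    loss_fake = -sum(math.log(1.0 - discriminator(x, w, c)) for x in fake) / len(fake)
    return loss_real + loss_fake


def g_loss(z_batch, a, b, w, c):
    # Non-saturating generator loss: the generator wants D(G(z)) close to 1
    return -sum(math.log(discriminator(generator(z, a, b), w, c))
                for z in z_batch) / len(z_batch)


def d_step(real, fake, w, c, lr):
    # Hand-derived gradient of d_loss with respect to w and c
    gw = (sum((discriminator(x, w, c) - 1.0) * x for x in real) / len(real)
          + sum(discriminator(x, w, c) * x for x in fake) / len(fake))
    gc = (sum(discriminator(x, w, c) - 1.0 for x in real) / len(real)
          + sum(discriminator(x, w, c) for x in fake) / len(fake))
    return w - lr * gw, c - lr * gc


def g_step(z_batch, a, b, w, c, lr):
    # Hand-derived gradient of g_loss with respect to a and b (discriminator held fixed)
    errs = [discriminator(generator(z, a, b), w, c) - 1.0 for z in z_batch]
    ga = sum(e * w * z for e, z in zip(errs, z_batch)) / len(z_batch)
    gb = sum(e * w for e in errs) / len(z_batch)
    return a - lr * ga, b - lr * gb


def train(steps=1000, batch=64, lr=0.05, seed=0):
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator starts by producing N(0, 1) samples
    w, c = 0.0, 0.0  # discriminator starts undecided (D = 0.5 everywhere)
    for _ in range(steps):
        real = [rng.gauss(4.0, 1.0) for _ in range(batch)]  # hypothetical real data
        z_batch = [rng.gauss(0.0, 1.0) for _ in range(batch)]
        fake = [generator(z, a, b) for z in z_batch]
        w, c = d_step(real, fake, w, c, lr)     # 1) train the discriminator
        a, b = g_step(z_batch, a, b, w, c, lr)  # 2) train the generator against it
    return a, b, w, c


if __name__ == "__main__":
    print(train())
```

Printing `b` over training shows the generator's samples moving away from 0 toward the real data. Toy GANs like this one often oscillate around the target instead of converging cleanly, which is a well-known difficulty of adversarial training.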

Other Neural Network Algorithms

The list of neural network algorithms does not end here. Many other architectures have contributed to the success of deep learning. Here are some other types of neural network algorithms that you should know:

- Recurrent neural networks (RNNs)

- Radial Basis Function Networks (RBFNs)

- Multilayer Perceptrons (MLPs)

- Deep Belief Networks (DBNs)

- Restricted Boltzmann Machines (RBMs)

- Autoencoders

In this article, we have looked at the types of neural network algorithms that play an important role in deep learning. We started with a basic introduction to neural networks, then described the main types, and finally listed the names of some other networks. This is not a complete list, because researchers keep developing new neural networks, and networks can be classified in many ways according to their functions. I hope you found it useful.
